On the Sufficiency of the MNIST Dataset

In our first lecture on machine learning, most of us learned that machines can classify handwritten digits effectively, sometimes even better than humans. As the course progresses, we realize that the “handwritten digits” the lecturer was referring to are the well-known MNIST dataset. As the introductory dataset for image classification, MNIST has accompanied us throughout our journey in machine learning. However, as of 2019, results on MNIST alone may not be sufficient to demonstrate that a model outperforms the baselines.

In this article, I will briefly introduce the MNIST dataset and explain why it is good for beginners. Then, I will discuss some problems with MNIST that make it hard to convince readers of your model’s performance. Finally, I will suggest some alternatives.

What is MNIST?

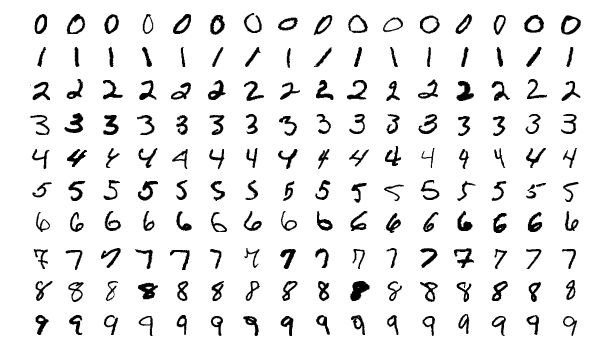

The MNIST dataset is a large dataset of images of handwritten digits (0-9) that is commonly used for training image classification algorithms. It consists of 60,000 images in the training set and 10,000 in the test set, distributed roughly evenly across the 10 digits.

Why is it good for entry-level ML?

There are several reasons why MNIST is good for beginners who want to get their hands dirty. To name a few:

- Computational efficiency: The entire training set is merely 9 megabytes, which easily fits on any modern computer, desktop or laptop.

- Many resources available: There are a large number of resources (GitHub repos, blogs, tutorials, publications) using MNIST.

- Easy to train and test: We will get back to this in the following section.

Why is it insufficient for cutting-edge research?

You can find all kinds of arguments online about whether the dataset is sufficient. Essentially, it all comes down to one thing: it does not represent modern computer vision tasks.

In comparison to other image datasets, MNIST is simpler in the following ways:

- Shape: Each image is relatively small (28×28 pixels).

- Grayscale: The MNIST images are all in grayscale, reducing each image to a single channel.

- Alignment: All images are perfectly aligned such that their center of mass is right in the center of the image.

- Balance: The number of samples per category is roughly the same in both the training set and the test set.
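The alignment and balance properties above can be checked with a few lines of code. The following is a minimal sketch in plain Python, not taken from any MNIST tooling: the helper names `center_of_mass` and `class_counts` and the 5×5 toy image are assumptions made purely for illustration.

```python
def center_of_mass(image):
    """Return the (row, col) intensity-weighted centroid of a 2D grayscale image.

    `image` is a list of rows, each a list of non-negative pixel intensities.
    """
    total = sum(sum(row) for row in image)
    if total == 0:
        raise ValueError("image has no ink, so the center of mass is undefined")
    row_c = sum(r * sum(row) for r, row in enumerate(image)) / total
    col_c = sum(c * value for row in image for c, value in enumerate(row)) / total
    return row_c, col_c


def class_counts(labels, num_classes=10):
    """Count how many samples carry each label in 0..num_classes-1."""
    counts = [0] * num_classes
    for label in labels:
        counts[label] += 1
    return counts


# Hypothetical 5x5 "digit": a symmetric cross centred on pixel (2, 2).
toy_image = [
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [9, 9, 9, 9, 9],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
]
print(center_of_mass(toy_image))                         # (2.0, 2.0)
print(class_counts([0, 1, 1, 2, 2, 2], num_classes=3))   # [1, 2, 3]
```

On a dataset whose images are already centered this way, a model never has to learn to cope with shifted inputs, which is one reason simple methods do so well.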

The simplicity of the MNIST dataset lets simple methods reach high test set accuracy. The table below is part of the MNIST leaderboard. One interesting observation is that simpler models can sometimes match far more complex ones on the test set. For example, the test set accuracy of a k-NN model with non-linear deformation is comparable to that of a deep neural network (99.46% vs 99.47%)! Does that tell us that a k-NN model with some fancy pre-processing is as good as a deep neural network in general? Certainly not. The problem here is the simplicity of the MNIST dataset: models do not need to be very complex to fit its distribution almost perfectly. A more complex model may still raise the test set accuracy by a fraction of a percentage point, but it is also much more prone to overfitting, which can take a long time to tune away.

| Classifier | Preprocessing | Test set accuracy (%) |
|---|---|---|
| K-NN with non-linear deformation (IDM) | shiftable edges | 99.46 |
| large conv. net, unsup pretraining [no distortions] | none | 99.47 |
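To put the gap between the two rows in perspective, it helps to convert the accuracies into a number of misclassified images. The sketch below assumes both results were measured on the full 10,000-image test set; the function name `misclassified` is a hypothetical helper, not part of any library.

```python
def misclassified(accuracy_percent, test_set_size=10_000):
    """Number of test images a model gets wrong at the given accuracy (in %)."""
    return round(test_set_size * (100 - accuracy_percent) / 100)


knn_errors = misclassified(99.46)  # k-NN with non-linear deformation
cnn_errors = misclassified(99.47)  # large conv. net
print(knn_errors, cnn_errors, knn_errors - cnn_errors)  # 54 53 1
```

Under that assumption, the two models differ by a single test image, which is far too small a margin to rank them.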

Imagine that you, my reader, are designing a final exam for a class of students. A sensible strategy is to split the simple, medium and hard questions in a 7:2:1 ratio. What happens if you use a ratio of 10:0:0 instead? The distribution of the students’ results would be heavily skewed towards full marks. On some questions, especially the ambiguous ones, smart students may even lose more marks than the rest of the class because they overcomplicate the problem.

This example brings me to the next point: modern deep learning models may not be able to show their advantage on MNIST. If you skim through GitHub repositories in which people train deep neural networks on MNIST, you’ll find that the accuracy of common neural network architectures tends to fluctuate between 99% and 99.8%, even though their top-1 error rates on ImageNet differ. This means these networks have reached a point where pre-processing and data augmentation techniques play a more important role than the network architectures themselves. Examples include ResNet [1, 3], ResNeXt [3] and CapsuleNet [2, 3].

The alternatives

That being said, although MNIST is insufficient to show the superiority of your idea, using it in your research does not invalidate your proposed method. There are some good alternatives to MNIST:

- Fashion-MNIST is designed as a drop-in replacement for MNIST.

- EMNIST extends the MNIST dataset to letters and digits.
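One practical consequence of a drop-in replacement is that existing loading code keeps working, because Fashion-MNIST is distributed in the same IDX file format with the same image size as MNIST. The following is a minimal sketch of an IDX image reader using only the standard library. The function name `read_idx_images` is an assumption, and the input is a synthetic byte string rather than a real downloaded file.

```python
import struct

# Magic number of an IDX image file: unsigned bytes, 3 dimensions.
IMAGE_MAGIC = 2051  # 0x00000803


def read_idx_images(raw):
    """Parse an uncompressed IDX image file given as bytes.

    Returns (count, rows, cols, pixels), where pixels is a bytes object
    of length count * rows * cols.
    """
    # The header is four big-endian 32-bit unsigned integers.
    magic, count, rows, cols = struct.unpack(">IIII", raw[:16])
    if magic != IMAGE_MAGIC:
        raise ValueError(f"unexpected magic number {magic}")
    pixels = raw[16:]
    if len(pixels) != count * rows * cols:
        raise ValueError("pixel data does not match the header")
    return count, rows, cols, pixels


# Synthetic stand-in for a real file: two blank 28x28 images.
fake_file = struct.pack(">IIII", IMAGE_MAGIC, 2, 28, 28) + bytes(2 * 28 * 28)
count, rows, cols, pixels = read_idx_images(fake_file)
print(count, rows, cols, len(pixels))  # 2 28 28 1568
```

The real files are usually shipped gzip-compressed, so they would need to be decompressed (for example with the standard `gzip` module) before being passed to a reader like this.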

[1] https://zablo.net/blog/post/using-resnet-for-mnist-in-pytorch-tutorial/index.html